Cuckoo search

Cuckoo search (CS) is an optimization algorithm developed by Xin-she Yang and Suash Deb in 2009.[1][2] It was inspired by the obligate brood parasitism of some cuckoo species by laying their eggs in the nests of other host birds (of other species). Some host birds can engage direct conflict with the intruding cuckoos. For example, if a host bird discovers the eggs are not their own, it will either throw these alien eggs away or simply abandon its nest and build a new nest elsewhere. Some cuckoo species such as the New World brood-parasitic Tapera have evolved in such a way that female parasitic cuckoos are often very specialized in the mimicry in colors and pattern of the eggs of a few chosen host species [3]

Cuckoo search idealized such breeding behavior, and thus can be applied for various optimization problems. It seems that it can outperform other metaheuristic algorithms in applications.[4]

Another seemingly unrelated algorithm for a completely different area of applications is called cuckoo hashing which was developed by Rasmus Pagh and Fleming Friche Rodler in 2001.[5]

Contents |

Cuckoo search

Cuckoo search (CS) uses the following representations:

Each egg in a nest represents a solution, and a cuckoo egg represents a new solution. The aim is to use the new and potentially better solutions (cuckoos) to replace a not-so-good solution in the nests. In the simplest form, each nest has one egg. The algorithm can be extended to more complicated cases in which each nest has multiple eggs representing a set of solutions.

CS is based on three idealized rules:

- Each cuckoo lays one egg at a time, and dumps its egg in a randomly chosen nest;

- The best nests with high quality of eggs will carry over to the next generation;

- The number of available hosts nests is fixed, and the egg laid by a cuckoo is discovered by the host bird with a probability

. Discovering operate on some set of worst nests, and discovered solutions dumped from farther calculations.

. Discovering operate on some set of worst nests, and discovered solutions dumped from farther calculations.

In addition, Yang and Deb discovered that the random-walk style search is better performed by Lévy flights rather than simple random walk.

The pseudo-code can be summarized as:

Objective function:Generate an initial population of

host nests; While (t<MaxGeneration) or (stop criterion) Get a cuckoo randomly (say, i) and replace its solution by performing Lévy flights; Evaluate its quality/fitness

[For maximization,

]; Choose a nest among n (say, j) randomly; if (

), Replace j by the new solution; end if A fraction (

) of the worse nests are abandoned and new ones are built; Keep the best solutions/nests; Rank the solutions/nests and find the current best; Pass the current best solutions to the next generation; end while Post-processing the results and visualization;

An important advantage of this algorithm is its simplicity. In fact, comparing with other population- or agent-based metaheuristic algorithms such as particle swarm optimization and harmony search, there is essentially only a single parameter  in CS (apart from the population size

in CS (apart from the population size  ). Therefore, it is very easy to implement.

). Therefore, it is very easy to implement.

Random Walks and the Step Size

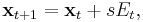

An important issue is the applications of Levy flights and random walks in the generic equation for generating new solutions

where  is drawn from a standard normal distribution with zero mean and unity standard deviation for random walks, or drawn from Levy distribution for Levy flights. Obviously, the random walks can also be linked with the similarity between a cuckoo's egg and the host's egg which can be tricky in implementation. Here the step size

is drawn from a standard normal distribution with zero mean and unity standard deviation for random walks, or drawn from Levy distribution for Levy flights. Obviously, the random walks can also be linked with the similarity between a cuckoo's egg and the host's egg which can be tricky in implementation. Here the step size  determines how far a random walker can go for a fixed number of iterations. The generation of Levy step size is often tricky, and a good algorithm is Mantegna's algorithm.[6]

determines how far a random walker can go for a fixed number of iterations. The generation of Levy step size is often tricky, and a good algorithm is Mantegna's algorithm.[6]

If s is too large, then the new solution generated will be too far away from the old solution (or even jump out side of the bounds). Then, such a move is unlikely to be accepted. If s is too small, the change is too small to be significant, and consequently such search is not efficient. So a proper step size is important to maintain the search as efficient as possible.

As an example, for simple isotropic random walks, we know that the average distance  traveled in the d-dimension space is

traveled in the d-dimension space is

where  is the effective diffusion coefficient. Here

is the effective diffusion coefficient. Here  is the step size or distance traveled at each jump, and

is the step size or distance traveled at each jump, and  is the time taken for each jump. The above equation implies that[7]

is the time taken for each jump. The above equation implies that[7]

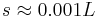

For a typical length scale L of a dimension of interest, the local search is typically limited in a region of  . For

. For  and t=100 to 1000, we have

and t=100 to 1000, we have  for d=1, and

for d=1, and  for d=10. Therefore, we can use s/L=0.001 to 0.01 for most problems. Though the exact derivation may require detailed analysis of the behaviour of Levy flights.[8]

for d=10. Therefore, we can use s/L=0.001 to 0.01 for most problems. Though the exact derivation may require detailed analysis of the behaviour of Levy flights.[8]

Algorithm and convergence analysis will be fruitful, because there are many open problems related to metaheuristics[9]

Implementation

The pseudo code was given in a sequential form, but Yang and Deb provides a vectorized implementation which is very efficient.[10] In the real world, if a cuckoo's egg is very similar to a host's eggs, then this cuckoo's egg is less likely to be discovered, thus the fitness should be related to the difference in solutions. Therefore, it is a good idea to do a random walk in a biased way with some random step sizes. For Matlab implementation, this biased random walk can partly be achieved by

- stepsize=rand*(nest(randperm(n),:)-nest(randperm(n),:));

- new_nest=nest+stepsize.*K;

where K=rand(size(nest))>pa and pa is the discovery rate. A standard CS matlab can be found here [1]

An object-oriented software implementation of cuckoo search was provided by Bacanin[11] On the other hand, a modified cuckoo search algorithm is also implemented for unconstrained optimization problems.[12]

An open source implementation of Modified Cuckoo Search can be found here http://code.google.com/p/modified-cs/

Modified Cuckoo Search

A modification of the standard Cuckoo Search was made by Walton et al.[13] with the aim to speed up convergence. The modification involves the additional step of information exchange between the top eggs. It was shown that Modified Cuckoo Search (MCS) outperforms the standard cuckoo search and other algorithms, in terms of convergence rates, when applied to a series of standard optimization benchmark objective functions.

Subsequently, the modified cuckoo search has been applied to optimize unstructured mesh which reduces computational cost significantly.[14] In addition, another interesting improvement to cuckoo search is the so-called quantum-inspired cuckoo search with convincing results [15]

Multiobjective Cuckoo Search (MOCS)

A multiobjective cuckoo search (MOCS) method has been formulated to deal with multi-criteria optimization problems.[16] This approach uses random weights to combine multiple objectives to a single objective. As the weights vary randomly, Pareto fronts can be found so the points can distributed diversely over the fronts.

Hybridization

Though cuckoo search is a swarm-intelligence-based algorithm, it can be still hybridized with other swarm-based algorithms such as PSO. For example, a hybrid CS-PSO algorithm seems to remedy the defect of PSO.[17]

Applications

The applications of Cuckoo Search into engineering optimization problems have shown its promising efficiency. For example, for both spring design and welded beam design problems, CS obtained better solutions than existing solutions in literature. A promising discrete cuckoo search algorithm is recently proposed to solve nurse scheduling problem.[18] An efficient computation approach based on cuckoo search has been proposed for data fusion in wireless sensor networks.[19][20] A new quantum-inspired cuckoo search was developed to solve Knapsack problems, which shows its effectiveness.[21]

A conceptual comparison of the cuckoo search with PSO, DE and ABC suggest that CS and DE algorithms provide more robust results than PSO and ABC.[22] An extensive detailed study of various structural optimization problems suggests that cuckoo search obtains better results than other algorithms.[23] In addition, a new software testing approach has been developed based on cuckoo search.[24] In addition, cuckoo search is particularly suitable for large scale problems, as shown in a recent study.[25] Cuckoo search has been applied to train neural networks with improved performance.[26] Furthermore, CS is successfully applied to train spiking neural models.[27] Cuckoo search has also been used to optimize web service composition process and planning graphs. [28]

Cuckoo search is a reliable approach for embedded system design[29] and design optimization.[30] An interesting application of cuckoo search is to solve boundary value problems.[31]

References

- ^ X.-S. Yang; S. Deb (December 2009). "Cuckoo search via Lévy flights". World Congress on Nature & Biologically Inspired Computing (NaBIC 2009). IEEE Publications. pp. 210–214. arXiv:1003.1594v1.

- ^ Inderscience (27 May 2010). "Cuckoo designs spring". Alphagalileo.org. http://www.alphagalileo.org/ViewItem.aspx?ItemId=76985&CultureCode=en. Retrieved 2010-05-27.

- ^ R. B. Payne, M. D. Sorenson, and K. Klitz, The Cuckoos, Oxford University Press, (2005).

- ^ http://www.scientificcomputing.com/news-DA-Novel-Cuckoo-Search-Algorithm-Beats-Particle-Swarm-Optimization-060110.aspx

- ^ R. Pagh and F. F. Rodler, Flemming Friche (2001), Cuckoo Hashing. doi:10.1.1.25.4189. http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.25.4189.

- ^ R. N. Mantegna, Fast, accurate algorithm for numerical simulation of Levy stable stochastic processes, Physical Review E, Vol.49, 4677-4683 (1994).

- ^ X.-S. Yang, Nature-Inspired Metaheuristic Algorithms, 2nd Edition, Luniver Press, (2010).

- ^ M. Gutowski, Levy flights as an underlying mechanism for global optimization algorithms, ArXiv Mathematical Physics e-Prints, June, (2001).

- ^ X. S. Yang, Metaheuristic optimization: algorithm analysis and open problems, in: Experimental Algorithms (SEA2011), Eds (P. M. Pardalos and S. Rebennack), LNCS 6630, pp.21-32 (2011).

- ^ X.-S. Yang and S. Deb, "Engineering optimisation by cuckoo search", Int. J. Mathematical Modelling and Numerical Optimisation", Vol. 1, No. 4, 330-343 (2010). http://arxiv.org/abs/1005.2908

- ^ N. Bacanin, An object-oriented software implementation of a novel cuckoo search algorithm, Proc. of the 5th European Conference on European Computing Conference (ECC'11), pp. 245-250 (2011).

- ^ M. Tuba, M. Subotic, and N. Stanarevic, Modified cuckoo search algorithm for unconstrained optimization problems, Proc. of the 5th European Conference on European Computing Conference (ECC'11), pp. 263-268 (2011).

- ^ S. Walton; O. Hassan, K. Morgan and M.R. Brown (30 June 2011). "Modified cuckoo search: A new gradient free optimisation algorithm". Chaos, Solitons & Fractals. doi:10.1016/j.chaos.2011.06.004.

- ^ S. Walton, O. Hassan, K. Morgan, Using proper orthogonal decomposition to reduce the order ot optimization problems, in: Proc. 16th Int. Conf. on Finite Elments in Flow Problems (Eds. Wall W.A. and Gvravemeier V.), Munich, p.90 (2011).

- ^ A. Layeb, A novel quantum inspired cuckoo search for knapsack problems, Int. J. Bio-Inspired Computation, Vol. 3, 297-305 (2011).

- ^ X. S. Yang and S. Deb, Multiobjective cuckoo search for design optimization, Computers and Operations Research, October (2011). doi:10.1016/j.cor.2011.09.026

- ^ F. Wang, L. Lou, X. He, Y. Wang, Hybrid optimization algorithm of PSO and Cuckoo Search, in: Proc. of 2nd Int. Conference on Artificial Intelligence, Management Science and Electronic Commerce (AIMSEC'11), pp. 1172-1175 (2011).

- ^ Tein L. H. and Ramli R., Recent advancements of nurse scheduling models and a potential path, in: Proc. 6th IMT-GT Conference on Mathematics, Statistics and its Applications (ICMSA 2010), pp. 395-409 (2010). http://research.utar.edu.my/CMS/ICMSA2010/ICMSA2010_Proceedings/files/statistics/ST-Lim.pdf

- ^ M. Dhivya, M. Sundarambal, L. N. Anand, Energy Efficient Computation of Data Fusion in Wireless Sensor Networks Using Cuckoo Based Particle Approach (CBPA), Int. J. of Communications, Network and System Sciences, Vol. 4, No. 4, 249-255 (2011).

- ^ M. Dhivya and M. Sundarambal, Cuckoo search for data gathering in wireless sensor networks,Int. J. Mobile Communications, Vol. 9, pp. 642-656 (2011).

- ^ A. Layeb, A novel quantum-inspired cuckoo search for Knapsack problems, Int. J. Bio-inspired Computation, Vol. 4, (2011).

- ^ P. Civicioglu and E. Besdok, A conception comparison of the cuckoo search, particle swarm optimization, differential evolution and artificial bee colony algorithms, Artificial Intelligence Review, DOI 10.1007/s10462-011-92760, 6 July (2011).

- ^ A. H. Gandomi, X. S. Yang, A. H. Alavi, Cuckoo search algorithm: a metaheuristic approach to solve structural optimization problems,Engineering with Computers, Vol. 27, July (2011).

- ^ K. Choudhary and G. N. Purohit, A new testing approach using cuckoo search to achieve multi-objective genetic algorithm, J. of Computing, Vol. 3, No. 4, 117-119 (2011).

- ^ E. R. Speed, Evolving a Mario agent using cuckoo search and softmax heuristics, Games Innovations Conference (ICE-GIC), pp. 1-7 (2010). DOI:10.1109/ICEGIC.2010.5716893

- ^ E. Valian, S. Mohanna and S. Tavakoli, Improved cuckoo search algorithm for feedforward neural network training, Int. J. Articial Intelligence and Applications, Vol. 2, No. 3, 36-43(2011).

- ^ R. A. Vazquez, Training spiking neural models using cuckoo search algorithm, 2011 IEEE Congress on Eovlutionary Computation (CEC'11), pp.679-686 (2011).

- ^ V. R. Chifu, C. B. Pop, I. Salomie, D> S. Suia and A. N. Niculici, Optimizing the semantic web service composition process using cuckoo search, in: Intelligent Distributed Computing V, Studies in Computational Intelligence, Vol. 382, pp. 93-102 (2012).

- ^ A. Kumar and S. Chakarverty, Design optimization for reliable embedded system using Cuckoo Search,in: Proc. of 3rd Int. Conference on Electronics Computer Technology (ICECT2011), pp. 564-268 (2011).

- ^ A. Kumar and S. Chakarverty, Design optimization using genetic algorithm and Cuckoo Search, in: Proc. of IEEE Int. Conference on Electro/Information Technology (EIT), pp. 1-5 (2011).

- ^ A. Goghrehabadi, M. Ghalambaz and A. Vosough, A hybrid power series -- Cuckoo search optimization algorithm to electrostatic deflection of micro fixed-fixed actuators, Int. J. Multidisciplinary Science and Engineering, Vol. 2, No. 4,22-26 (2011).

|

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||